Just wanted to mention a few things I’ve figured out from playing with the camera. You may already know all this, but I wanted to pass it on just in case.

1. Pan and zoom buttons can be held down to increase the amount of the adjustment. The arrow buttons for panning the camera left, right, up, and down, as well as the ‘+’ and ‘-’ zoom buttons, can be held down, rather than merely clicked. The longer you hold the mouse button down, the further the pan or zoom goes; it keeps increasing until you release the button. This is handy for things like scanning across a landscape. Until I figured this out I’d just click the pan button to scoot over a little bit, then click again to scoot a little more. Now I can move the rectangle all the way over to a fresh position adjoining the area previously scanned, covering a lot of ground relatively efficiently.

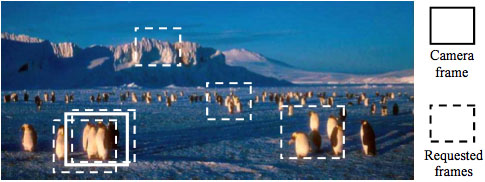

2. The colors of the preview rectangles correlate with the colors of the usernames in the “Online” box. By comparing the color of the rectangles in the positioning panorama with the colors of the listed usernames, you can tell who’s voting to position the camera where. I’m not sure how useful that is, but it lets you grumble more specifically when you’re in a pointing war with someone. “Look at the birdbath!” “No, look at the feeders!” “No, zoom in on the third leaf from the left in that tree over there!” Grr.

3. Pointing is collaborative, rather than via a queue. This leads me to my final item. A lot of the time, it seems like people are taking turns positioning the camera. One person draws a rectangle, there’s a pause, and the camera goes there. Someone else draws a rectangle, pause, and the camera goes there. It feels like we’re taking turns, and as long as only one person is drawing a rectangle between successive “beats” of the system, that is indeed what’s happening.

But the pointing algorithm is more sophisticated than that. I spent some time today reading through the PDFs in the “Related Publications” section of the CONE site, and although I got lost in the math pretty quickly, the gist of it is that when multiple pointing requests are received during the same beat, the software controlling the camera does its best to figure out the pan and zoom that will make the largest number of users happy.

It’s not just an average of all the inputs; that would be stupid, since one person trying to look at the feeders and another trying to look at the birdbath would result in the camera pointing to no-man’s-land in between. And it’s not just a rectangle that encompasses as many of the users’ rectangles as possible, since that would likewise be stupid: Users are requesting a particular zoom factor for a reason, and giving a zoomed-out view that encompasses two zoomed-in requests isn’t going to make either user happy.

Instead, the camera says, okay; how can I make the largest number of users happy? Then it does that.

(From Dezhen Song and Ken Goldberg’s Networked Robotic Cameras for Collaborative Observation of Natural Environments — 480K PDF file.)
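To make that idea concrete for myself, here’s a toy sketch in Python. To be clear, this is not the formula from the paper (I got lost in that math, remember). The `Rect` type, the `satisfaction` score, and the `best_frame` function are all names and simplifications I made up for illustration. The assumption is that a user is happy in proportion to how much of their rectangle is in view, and less happy if the view is zoomed out further than they asked. The sketch also only tries a handful of candidate frames rather than searching every possible pan and zoom.

```python
from typing import NamedTuple, Optional, Sequence


class Rect(NamedTuple):
    """A requested or candidate camera frame (hypothetical type)."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height

    @property
    def area(self) -> float:
        return self.w * self.h


def overlap_area(a: Rect, b: Rect) -> float:
    """Area shared by two rectangles, or 0.0 if they do not overlap."""
    dx = min(a.x + a.w, b.x + b.w) - max(a.x, b.x)
    dy = min(a.y + a.h, b.y + b.h) - max(a.y, b.y)
    return dx * dy if dx > 0 and dy > 0 else 0.0


def satisfaction(request: Rect, frame: Rect) -> float:
    """Assumed score in [0, 1]: 1.0 when the frame matches the request exactly.

    Dividing by the larger of the two areas penalizes both a frame that
    misses the request and a frame that is zoomed out much further than asked.
    """
    return overlap_area(request, frame) / max(request.area, frame.area)


def best_frame(requests: Sequence[Rect],
               candidates: Optional[Sequence[Rect]] = None) -> Rect:
    """Return the candidate with the highest total satisfaction.

    By default the candidates are just the requests themselves, which is a
    simplification; a real system would search many more frames.
    """
    if candidates is None:
        candidates = requests
    return max(candidates,
               key=lambda f: sum(satisfaction(r, f) for r in requests))


# Two users want the feeders, one wants the birdbath.
feeders_a = Rect(0, 0, 10, 10)
feeders_b = Rect(1, 1, 10, 10)
birdbath = Rect(50, 0, 10, 10)
requests = [feeders_a, feeders_b, birdbath]

# The two "stupid" strategies from above, added as extra candidates.
averaged = Rect(17, 1 / 3, 10, 10)   # average of the three positions
enclosing = Rect(0, 0, 60, 11)       # zoomed-out box around everything

winner = best_frame(requests, requests + [averaged, enclosing])
print(winner)
```

With these made-up numbers, the averaged frame overlaps nobody’s request and the enclosing frame is so zoomed out that everyone scores poorly, so the winner is one of the feeder frames, where two of the three users are looking.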

The cool thing about this approach is how well it scales. With a queue, having too many users seriously degrades the experience. But with this approach, there’s actually the possibility that the user experience will get better as the number of users increases. It’s that wisdom of crowds thing. Individually we’re stupid, but collectively we can be pretty smart, and having a means of polling everyone in real time means the dummies get out-voted.

In practical terms, what this means for me is that I shouldn’t treat camera pointing as a queue, at least not when it’s important. Most of the time I’m happy to let someone else drive. But when there’s something specifically going on that I really want to see, I should be in there drawing rectangles for all I’m worth. And the rest of you should be, too. Don’t wait for your “turn”. Just go for it, and let the software figure it out.